Chiplets And New Tools Transform Chipmaking

Q1. Could you start by giving us a brief overview of your professional background, particularly focusing on your expertise in the industry?

I am Varadarajan Parthasarathy, and I hold a PhD in Physics with applications in astronomical sciences. At present, I focus on domains such as semiconductors, high-performance computing, and artificial intelligence.

Q2. What are the key challenges in scaling domain-specific GPUs, ASICs, and accelerators for generative AI training and high-performance computing workloads?

One of the main challenges I see is managing power and thermal requirements. As power density increases at advanced nodes, cooling becomes a significant issue, especially in dense server environments. Ensuring effective power delivery and heat dissipation is often a primary bottleneck.

Memory bandwidth and hierarchy are also critical. Large models and datasets put significant pressure on memory subsystems. In my experience, developing optimized high-bandwidth memory interfaces and new memory hierarchies is both complex and costly.

Scalability is another area that demands focus. Moving beyond a single chip means we need ultra-high-bandwidth, low-latency interconnects. Achieving effective parallelism across many accelerators is a significant systems challenge.

Software and programmability are just as important as hardware. Building robust software stacks that can fully utilize the hardware and are easy for developers to adopt is often more challenging than the hardware design itself.

Economic viability is always a consideration. The high non-recurring engineering costs at advanced nodes mean that only organizations with large market volumes can justify the investment.

Q3. Which emerging chiplet-based or heterogeneous integration techniques are gaining traction for custom AI accelerators, and what impact do they deliver on development cycles versus monolithic designs?

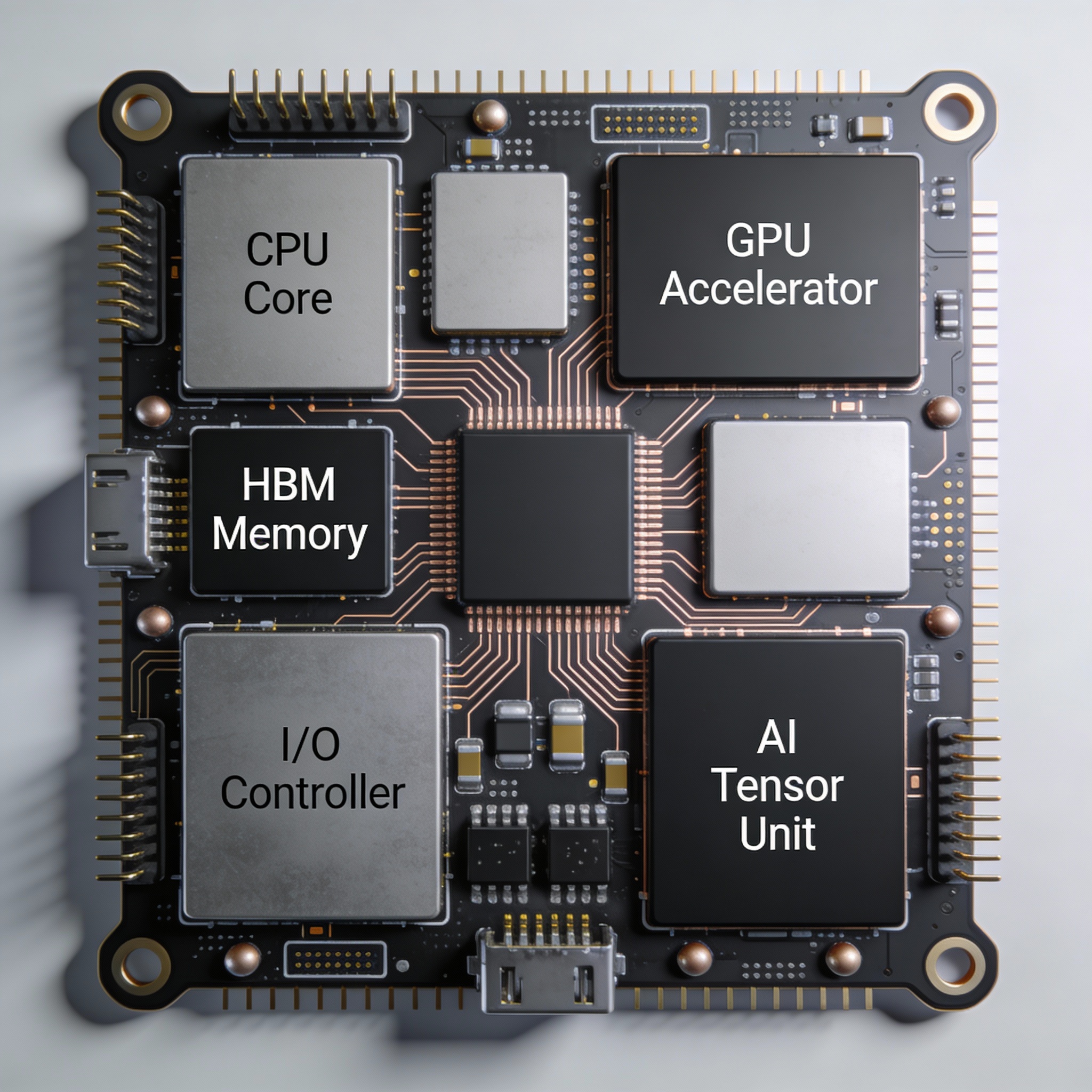

Universal Chiplet Interconnect Express (UCIe) is rapidly emerging as a critical open standard for enabling seamless connectivity between heterogeneous chiplets. With cutting-edge packaging technologies from leaders like TSMC and Intel, it’s now possible to tightly integrate logic, memory, and analog components within a single package, driving new levels of system performance and capability.

Impact on Development Cycle vs. Monolithic Approaches

Accelerated Time-to-Market & Cost Efficiency: By allowing designers to “mix and match” pre-validated chiplets (for example, pairing a proprietary compute die with industry-standard I/O or memory chiplets), UCIe reduces the need for large, monolithic dies. Smaller dies improve manufacturing yield and lower costs, and the modular approach also enables incremental product upgrades and faster deployment cycles.

Greater Design Flexibility & Specialization: Chiplet-based architectures empower engineers to select the optimal process node for each function (e.g., compute at 3nm, analog at 12nm, memory on mature nodes), supporting integration of best-in-class IP from diverse sources with fewer barriers.

Development Cycle Trade-offs: While the initial architecture and co-design process become more complex, this approach significantly reduces the risk associated with full-custom chip tape-outs. For derivative or updated products, total development time can shrink from years to months, even considering the additional validation required for advanced packaging and interconnects.
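The yield argument for smaller dies can be illustrated with a minimal sketch. It assumes the simple Poisson yield model (yield = exp(-area × defect density)) and known-good-die testing before assembly. The die areas and the defect density below are hypothetical values chosen only for illustration, and the sketch ignores packaging yield and the extra area needed for die-to-die interfaces.

```python
import math


def poisson_yield(area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of defect-free dies under the simple Poisson yield model."""
    area_cm2 = area_mm2 / 100.0  # 1 cm^2 = 100 mm^2
    return math.exp(-area_cm2 * defects_per_cm2)


def silicon_per_good_unit(total_area_mm2: float, n_dies: int,
                          defects_per_cm2: float) -> float:
    """Silicon area fabricated per good product.

    Assumes the product is split into n_dies equal dies and that bad dies
    are screened out before assembly (known-good-die testing).
    """
    die_area = total_area_mm2 / n_dies
    die_yield = poisson_yield(die_area, defects_per_cm2)
    return n_dies * die_area / die_yield


# Hypothetical numbers, not taken from any foundry.
D0 = 0.1            # defects per cm^2
TOTAL_AREA = 800.0  # mm^2 of logic, either monolithic or split four ways

monolithic = silicon_per_good_unit(TOTAL_AREA, 1, D0)
chiplets = silicon_per_good_unit(TOTAL_AREA, 4, D0)

print(f"monolithic: {monolithic:.0f} mm^2 of silicon per good unit")
print(f"4 chiplets: {chiplets:.0f} mm^2 of silicon per good unit")
```

Under these assumptions the chiplet split wastes less silicon per good unit, because a single defect scraps a 200 mm² die rather than an 800 mm² one.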

Q4. How are advancements in C++/Python/Rust tooling enabling 2nm/3nm node optimizations for AI-HPC chips, and what supply chain risks could derail market growth?

Tooling Advancements

High-Level Synthesis (HLS) and Domain-Specific Languages: Modern chip design is getting a boost from C++-based HLS and Python domain-specific languages, which let engineers quickly test and iterate on new micro-architectures. Instead of painstakingly writing all the RTL by hand, teams can explore designs tuned for the realities of advanced nodes, such as electrical parasitics and circuit aging, much faster and with more flexibility.

Physical-Aware Co-Design: New tools now bring place-and-route and timing closure feedback much earlier into the design process. This means that even at the C++ or Rust logic development stage, engineers are already thinking about how their designs will actually get manufactured at cutting-edge 2nm or 3nm nodes, helping catch problems before they become costly.

Rust for Safety & Productivity: Rust is gaining attention for writing secure, reliable firmware and low-level control logic. Its strict memory safety and concurrency checks help prevent bugs and vulnerabilities, which is especially important in critical hardware systems.

Supply Chain Risks

Geopolitical Fragmentation: The global chip supply chain is facing growing risks from export restrictions and regional efforts to control advanced fab capacity. Legal hurdles and bottlenecks are becoming more common as TSMC, Samsung, and Intel expand regionally.

EUV Tool Monopoly: ASML is the sole supplier of EUV lithography tools, so the world depends on a single company for key manufacturing equipment. Any disruption, whether in tool availability or throughput, can slow down the entire industry’s move to advanced nodes.

Specialized Material & IP Dependence: Production can grind to a halt if there are shortages of rare gases, specialty chemicals, or essential IP blocks like high-speed SerDes. These components are critical and often sourced from only a few suppliers.

Packaging & Test Capacity: Demand for advanced packaging technologies like CoWoS is soaring, but capacity is tight. As a result, packaging and testing are becoming new bottlenecks in the semiconductor supply chain.

Q5. What software profiling/debugging innovations are accelerating edge-to-cloud hybrid deployments?

Unified Observability Platforms: Tools that provide a single pane of glass for tracing an AI workload’s performance, power, and latency metrics as it moves from edge devices to cloud instances.

Deterministic Profiling and Digital Twins: Hardware-in-the-loop profiling with cycle-accurate software models (“digital twins”) of both edge accelerators and cloud GPUs to debug partitioning and synchronization issues offline.

Federated Debugging & Root-Cause Analysis: AIOps-driven tools that can correlate low-level kernel failures on an edge device with system-level orchestration logs in the cloud, identifying whether a fault is in data, model, hardware, or network.

Over-the-Air (OTA) Profiling Updates: Secure, low-overhead agents deployed on edge devices that can stream performance counters to the cloud for centralized analysis, allowing proactive optimization of deployed models.

Q6. In your view, which underserved generative AI/HPC sub-sectors offer the highest near-term opportunities for purpose-built accelerators? What customer needs are these accelerators uniquely positioned to address?

Scientific AI / AI-for-Science

In scientific AI, there is a need for custom accelerators that support mixed-precision and specialized math functions, such as those required for molecular dynamics or climate modeling. These accelerators provide the precision and capabilities that general-purpose AI chips often lack.

Generative AI for 3D & Multimedia Content Creation

Needs Addressed: Real-time diffusion models for texture/3D model generation, neural radiance fields (NeRF), and video synthesis demand immense bandwidth for high-resolution tensors and ray-tracing-like operations. Accelerators with massive on-chip SRAM and hardware-accelerated sampling/rasterization can uniquely serve media/entertainment and game development studios.

Real-Time AI Inference in Robotics and Autonomous Systems

In robotics and autonomous systems, purpose-built accelerators that combine vision processing, sensor fusion, and control networks on a single chip are needed to meet strict requirements for latency, reliability, and real-time operation.

Privacy-Preserving and On-Device AI

Needs Addressed: Demand is growing for fully homomorphic encryption (FHE) and confidential computing at scale. Accelerators with hardware for polynomial multiplication and modular arithmetic can make encrypted AI training/inference practical, addressing regulatory and privacy needs in healthcare and finance.
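To give a sense of the arithmetic such hardware would target, here is a minimal sketch of polynomial multiplication with modular arithmetic in the ring Z_q[x]/(x^n + 1), a structure commonly used in lattice-based FHE schemes. The function name `negacyclic_mul` and the toy parameters are assumptions for illustration. Practical implementations typically use a number-theoretic transform and far larger parameters rather than this O(n²) schoolbook loop.

```python
def negacyclic_mul(a: list[int], b: list[int], q: int) -> list[int]:
    """Multiply two polynomials in Z_q[x] / (x^n + 1), schoolbook style.

    Coefficients are stored lowest degree first. Because x^n = -1 in this
    ring, any term that overflows degree n - 1 wraps around with a sign flip.
    """
    n = len(a)
    if len(b) != n:
        raise ValueError("both polynomials must have the same length n")
    out = [0] * n
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            k = i + j
            prod = ai * bj
            if k >= n:
                # Wrap-around term: x^k = -x^(k - n)
                out[k - n] = (out[k - n] - prod) % q
            else:
                out[k] = (out[k] + prod) % q
    return out


# Toy parameters (hypothetical): n = 4, q = 17.
q = 17
x = [0, 1, 0, 0]        # the polynomial x
x_cubed = [0, 0, 0, 1]  # the polynomial x^3

# x * x^3 = x^4 = -1, which is q - 1 modulo q.
print(negacyclic_mul(x, x_cubed, q))  # [16, 0, 0, 0]
```

The inner loop is dominated by multiply, add, and reduce-modulo-q steps, which is why dedicated modular multipliers are an attractive target for acceleration.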

Q7. If you were an investor looking at companies within the space, what critical question would you pose to their senior management?

If I were evaluating companies as an investor, my primary focus would be on the potential for strong returns. I would look for organizations that are well positioned to deliver reliable and high-value outcomes, ensuring that my investment is justified.
